Cartesia claims its AI is efficient enough to run pretty much anywhere

It’s becoming increasingly costly to develop and run AI. OpenAI’s spending on AI operations could reach $7 billion this year, while Anthropic’s CEO recently suggested that models costing over $10 billion could arrive soon.

So the hunt is on for ways to make AI cheaper.

Some researchers are focusing on techniques to optimize existing model architectures — i.e. the structure and components that make models tick. Others are developing new architectures they believe have a better shot of scaling up affordably.

Karan Goel is in the latter camp. At Cartesia, the startup he co-founded, Goel is working on state space models (SSMs), a newer, highly efficient model architecture that can handle large amounts of data, such as text and images, at once.

“We believe new model architectures are necessary to build truly useful AI models,” Goel told TechCrunch. “The AI industry is a competitive space, both commercial and open source, and building the best model is crucial to success.”

Academic roots

Before joining Cartesia, Goel was a PhD candidate in Stanford’s AI lab, where he worked under the supervision of computer scientist Christopher Ré, among others. While at Stanford, Goel met Albert Gu, a fellow PhD candidate in the lab, and the two sketched out what would become the SSM.

Goel eventually took part-time jobs at Snorkel AI, then Salesforce, while Gu became an assistant professor at Carnegie Mellon. But Gu and Goel kept studying SSMs, releasing several pivotal research papers on the architecture.

In 2023, Gu and Goel, along with two of their former Stanford peers, Arjun Desai and Brandon Yang, joined forces to launch Cartesia and commercialize their research.

Cartesia, whose founding team also includes Ré, is behind many derivatives of Mamba, perhaps the most popular SSM today. Gu and Princeton professor Tri Dao started Mamba as an open research project last December, and continue to refine it through subsequent releases.

Cartesia builds on top of Mamba in addition to training its own SSMs. Like all SSMs, Cartesia’s give AI something like a working memory, making the models faster — and potentially more efficient — in how they draw on computing power.

SSMs vs. transformers

Most AI apps today, from ChatGPT to Sora, are powered by models with a transformer architecture. As a transformer processes data, it adds entries to something called a “hidden state” to “remember” what it processed. For instance, if the model is working its way through a book, the hidden state values might be representations of words in the book.

The hidden state is part of the reason transformers are so powerful. But it’s also the cause of their inefficiency. To “say” even a single word about a book a transformer just ingested, the model would have to scan through its entire hidden state — a task as computationally demanding as rereading the whole book.
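To make that cost concrete, here is a toy sketch of a single transformer decoding step. It assumes one-dimensional "embeddings" and reuses each token as its own query, key, and value, which no real model does. The growing list plays the role of the hidden state described above, which researchers usually call the key-value (KV) cache. The only point it illustrates is that every new token must be scored against every entry stored so far.

```python
import math


def attention_step(cache, query, new_key, new_value):
    """One toy single-head attention step over a growing key/value cache.

    Appends the new token's (key, value) pair, then scores the query against
    every cached key. Returns (output, number_of_cache_entries_scored).
    """
    cache.append((new_key, new_value))  # the cache grows by one entry per token
    scores = [query * k for k, _ in cache]  # one score per token seen so far
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]  # softmax, shifted for stability
    total = sum(weights)
    output = sum(w * v for w, (_, v) in zip(weights, cache)) / total
    return output, len(cache)


cache = []
work = []
for token in [0.5, -1.0, 2.0, 0.1, 0.7]:
    # Hypothetical simplification: query = key = value = the raw token value.
    _, scored = attention_step(cache, query=token, new_key=token, new_value=token)
    work.append(scored)

print(work)  # [1, 2, 3, 4, 5]
```

Per-token work grows with everything already read, so processing n tokens this way takes on the order of n²/2 comparisons in total.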

In contrast, SSMs compress all prior data points into a fixed-size summary of everything they’ve seen. As new data streams in, the model’s “state” gets updated, and the SSM discards most of the previous data.
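For contrast, here is a toy sketch of the SSM idea: a discretized, diagonal linear state space model whose state is a fixed-length list. The parameter values are arbitrary assumptions chosen for illustration, not Cartesia's; in Mamba, such parameters are learned and some are made to depend on the input. Each new input is folded into the state and then dropped, so the work per step stays constant however long the stream gets.

```python
# Hypothetical parameters for a 3-channel diagonal SSM (illustration only).
A = [0.9, 0.5, 0.1]  # per-channel decay: how much of the old summary survives a step
B = [1.0, 1.0, 1.0]  # how strongly each new input is written into the state
C = [0.2, 0.3, 0.5]  # how the state is read out into an output


def ssm_step(state, x):
    """Fold one input x into the running summary: h_t = A*h_{t-1} + B*x_t, y_t = C.h_t."""
    new_state = [a * h + b * x for a, h, b in zip(A, state, B)]
    y = sum(c * h for c, h in zip(C, new_state))
    return new_state, y


state = [0.0, 0.0, 0.0]
outputs = []
for x in [0.5, -1.0, 2.0, 0.1, 0.7]:
    state, y = ssm_step(state, x)  # past inputs are not kept, only the summary
    outputs.append(y)

print(len(state))  # 3
```

Unrolled, the last output is a weighted sum over all five inputs with per-channel weights C·A^k·B, so the three numbers in `state` do summarize the whole history. Because older inputs decay, the summary is lossy, which is the trade-off the paragraph above describes.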

The result? SSMs can handle large amounts of data while outperforming transformers on certain data generation tasks. With inference costs going the way they are, that’s an attractive proposition indeed.

Ethical concerns

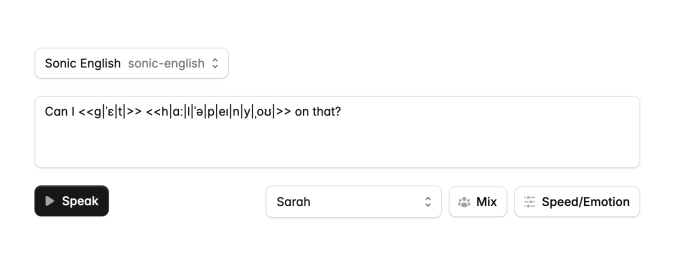

Cartesia operates like a community research lab, developing SSMs in partnership with outside organizations as well as in-house. Sonic, the company’s latest project, is an SSM that can clone a person’s voice or generate a new voice and adjust the tone and cadence in the recording.

Goel claims that Sonic, which is available through an API and web dashboard, is the fastest model in its class. “Sonic is a demonstration of how SSMs excel on long-context data, like audio, while maintaining the highest performance bar when it comes to stability and accuracy,” he said.

While Cartesia has managed to ship products quickly, it’s stumbled into many of the same ethical pitfalls that’ve plagued other AI model-makers.

Cartesia trained at least some of its SSMs on The Pile, an open data set known to contain unlicensed copyrighted books. Many AI companies argue that fair-use doctrine shields them from infringement claims. But that hasn’t stopped authors from suing Meta and Microsoft, plus others, for allegedly training models on The Pile.

And Cartesia has few apparent safeguards for its Sonic-powered voice cloner. A few weeks back, I was able to create a clone of Vice President Kamala Harris’ voice using campaign speeches. Cartesia’s tool only requires that you check a box indicating that you’ll abide by the startup’s ToS.

Cartesia isn’t necessarily worse in this regard than other voice cloning tools on the market. With reports of voice clones beating bank security checks, however, the optics aren’t amazing.

Goel wouldn’t confirm that Cartesia has stopped training models on The Pile. But he did address the moderation issues, telling TechCrunch that Cartesia has “automated and manual review” systems in place and is “working on systems for voice verification and watermarking.”

“We have dedicated teams testing for aspects like technical performance, misuse, and bias,” Goel said. “We’re also establishing partnerships with external auditors to provide additional independent verification of our models’ safety and reliability … We recognize this is an ongoing process that requires constant refinement.”

After this story was published, a PR rep for Cartesia said via email that the company is “no longer training models on The Pile.”

Budding business

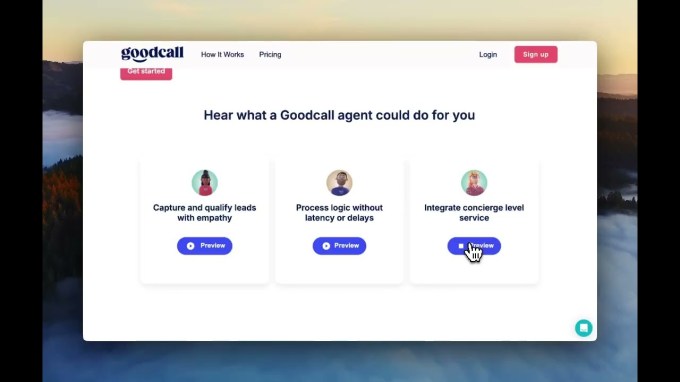

Goel says that “thousands” of customers are paying for Sonic API access, Cartesia’s primary source of revenue, including automated calling app Goodcall. Cartesia’s API is free for up to 100,000 characters read aloud, with the most expensive plan topping out at $299 per month for 8 million characters. (Cartesia also offers an enterprise tier with dedicated support and custom limits.)
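As a rough illustration of what the top tier works out to, the arithmetic below uses only the plan figures quoted above and assumes a customer uses the full 8 million characters in a month; Cartesia's actual billing terms may differ.

```python
# Figures from the article; full monthly usage of the top plan is an assumption.
TOP_PLAN_USD_PER_MONTH = 299
TOP_PLAN_CHARACTERS = 8_000_000
FREE_TIER_CHARACTERS = 100_000

# Effective price per million characters on the top plan, if fully used.
usd_per_million_chars = TOP_PLAN_USD_PER_MONTH / (TOP_PLAN_CHARACTERS / 1_000_000)

# How many free-tier allotments fit into the top plan's monthly allotment.
free_tiers_per_top_plan = TOP_PLAN_CHARACTERS // FREE_TIER_CHARACTERS

print(usd_per_million_chars)    # 37.375
print(free_tiers_per_top_plan)  # 80
```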

By default, Cartesia uses customer data to improve its products — a not-unheard-of policy, but one unlikely to sit well with privacy-conscious users. Goel notes that users can opt out if they wish, and that Cartesia offers custom retention policies for larger orgs.

Cartesia’s data practices don’t appear to be hurting business, for what it’s worth — at least not while Cartesia has a technical advantage. Goodcall CEO Bob Summers says that he chose Sonic because it was the only voice generation model with a latency under 90 milliseconds.

“[It] outperformed its next best alternative by a factor of four,” Summers added.

Today, Sonic’s being used for gaming, voice dubbing, and more. But Goel thinks it’s only scratching the surface of what SSMs can do.

He envisions models that run on any device and understand and generate any modality of data (text, images, videos, and so on) almost instantly. In a small step toward this, Cartesia this summer launched a beta of Sonic On-Device, a version of Sonic optimized to run on phones and other mobile devices for applications like real-time translation.

Alongside Sonic On-Device, Cartesia published Edge, a software library to optimize SSMs for different hardware configurations, and Rene, a compact language model.

“We have a big, long-term vision of becoming the go-to multimodal foundation model for every device,” Goel said. “Our long-term roadmap includes developing multimodal AI models, with the goal of creating real-time intelligence that can reason over massive contexts.”

If that’s to come to pass, Cartesia will have to convince potential new clients that its architecture is worth the learning curve. It’ll also have to stay ahead of other vendors experimenting with alternatives to the transformer.

Startups Zyphra, Mistral, and AI21 Labs have trained hybrid Mamba-based models. Elsewhere, Liquid AI, led by robotics luminary Daniela Rus, is developing its own architecture.

Goel asserts that 26-employee Cartesia is positioned for success, though — thanks in part to a new cash infusion. The company this month closed a $22 million funding round led by Index Ventures, bringing Cartesia’s total raised to $27 million.

Shardul Shah, partner at Index Ventures, sees Cartesia’s tech one day driving apps for customer service, sales and marketing, robotics, security, and more.

“By challenging the traditional reliance on transformer-based architectures, Cartesia has unlocked new ways to build real-time, cost-effective, and scalable AI applications,” he said. “The market is demanding faster, more efficient models that can run anywhere — from data centers to devices. Cartesia’s technology is uniquely poised to deliver on this promise and drive the next wave of AI innovation.”

A* Capital, Conviction, General Catalyst, Lightspeed, and SV Angel also participated in San Francisco-based Cartesia’s latest funding round.

Source: https://techcrunch.com